Who is it? I'm not sure, but I'm somewhere up there in the top 1% and that lets me charge $200/hr for my time. So why do I say I'm in the top 1%? Because I have this:

What is it, you ask? It's MY code gen tool. It is the culmination of 10 years of development projects combined into a massive code generation platform. Some of you know I am one of the architects behind the gift and loyalty platform used by Quiznos, Boston Market, Panda Express, etc. It processes millions of transactions every day and has some very cool architectural components, very similar to those of the Visa and Discover networks (but a lot better). Some of you might also know that I worked at Avanade when they FULLY owned Enterprise Library (no, MS patterns and practices didn't exist before Avanade created it) and had built the first TRUE SOA framework for .NET, called ACA.NET. Through large projects like these, I have evolved the tool to generate the many different layers that one would ever want to have in a data-driven platform.

So back to SharePoint: what have I done with the tool and SharePoint? Being a top-selling author of SharePoint courseware, I kinda know what people are doing day in and day out. Taking that information, I have automated those tasks in my code gen tool. Let me demonstrate!

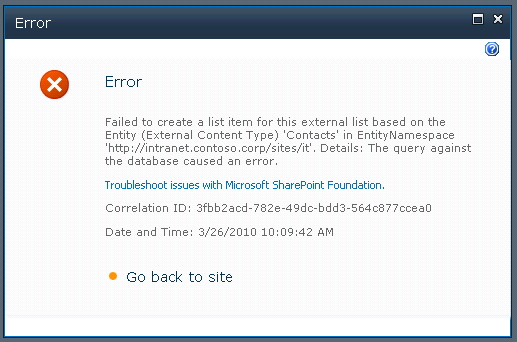

Many of you have seen my previous blog post on BCS/BDC Report Card. The problem with BCS/BDC is that tables that have relationships don't work very well when WRITING data. So what are our options?

- Custom Web Application in layouts directory

- Custom Web Parts

The first option isn't very flexible and you end up having to implement your own security, so option #2 becomes the preferred method: it takes advantage of SharePoint's simple web part page setup and built-in security.

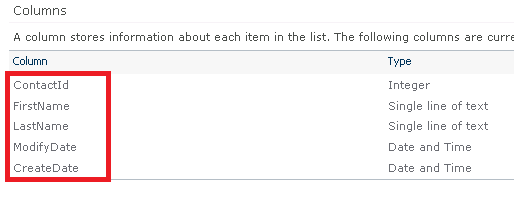

Let's take the table example from my last post:

```sql
CREATE TABLE [dbo].[Contact](
    [ContactId] [int] NOT NULL,
    [FirstName] [varchar](50) NOT NULL,
    [LastName] [varchar](50) NOT NULL,
    [StatusId] [int] NOT NULL,
    [ModifyDate] [datetime] NOT NULL,
    [CreateDate] [datetime] NOT NULL
)
```

and the related table:

```sql
CREATE TABLE [dbo].[Status](
    [StatusId] [int] NOT NULL,
    [ShortName] [varchar](50) NOT NULL,
    [LongName] [varchar](50) NOT NULL,
    [ModifyDate] [datetime] NOT NULL,
    [CreateDate] [datetime] NOT NULL
)
```
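The two tables are related through `StatusId`. For reference, a foreign key that declares this relationship might look like the sketch below. The constraint names are hypothetical, and the primary key on `Status` is an assumption: the DDL above declares no key, and SQL Server requires the referenced column to be a primary key or unique.

```sql
-- Assumption: StatusId uniquely identifies a row in Status.
-- A foreign key can only reference a PRIMARY KEY or UNIQUE column,
-- so one is declared first. [PK_Status] is a hypothetical name.
ALTER TABLE [dbo].[Status]
    ADD CONSTRAINT [PK_Status] PRIMARY KEY ([StatusId]);
GO

-- Every Contact row must point at an existing Status row.
-- [FK_Contact_Status] is a hypothetical name.
ALTER TABLE [dbo].[Contact]
    ADD CONSTRAINT [FK_Contact_Status]
    FOREIGN KEY ([StatusId]) REFERENCES [dbo].[Status] ([StatusId]);
GO
```

With a constraint like this in place, a tool that reads the database schema can discover that `Contact.StatusId` should be chosen from the rows of `Status` rather than typed in by hand.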

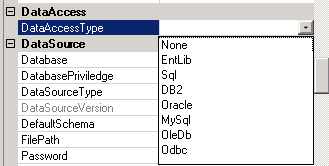

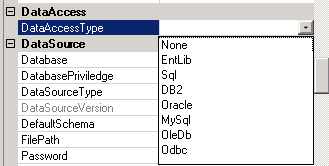

For now, I'm not going to implement the relationship in the database, which mimics the point where BCS functionality maxes out. The first step is to point the tool at the database. Note that I can support the following data sources:

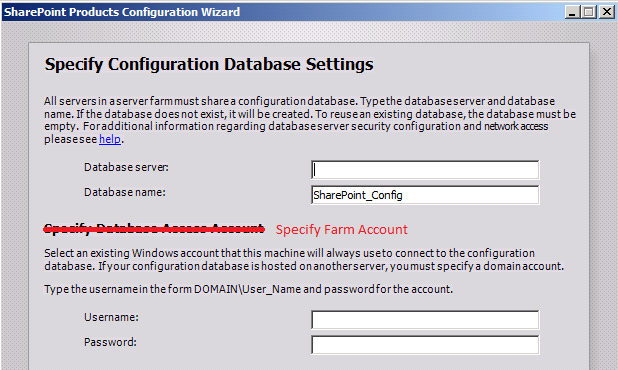

For now, I'm simply using SQL Server, but using any of the above I could generate the same code base. I also need to set the output path for my generated code and the namespace of the code that will come out the other side. I will discuss some of the other things that happen automatically when a new configuration is created (which are important for the SharePoint steps later on).

The next step is to generate the table and relationship configurations. What is really happening, and all the possible overrides, is a super advanced topic (likely worth a Computer Science thesis paper on massively scalable system generation techniques). Just know that I have solved many of the code generation problems that typical tools can't overcome.

Notice that I too, like BDCMetaman, generate my own BDC app def files. For the last step I simply had to generate these (the tool did it automatically) so the core knows what type of code to generate. It would give me an error at runtime if it found a table that doesn't have a configuration.

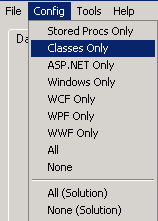

As with any good codegen tool, it has to generate your data access layer. So let's do that. Note all the options I can choose from:

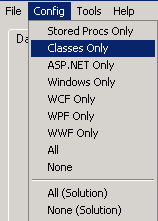

Enterprise Library is the MOST flexible (RUNTIME switching of the back end data source), so we'll use that. We have all the basics entered. Time to generate some code! The first step is to generate the base classes and class managers. With a single click of a button, I will generate several million lines of code. And after the fifth year of building this tool, I realized that all the things I have built take a LONG time to generate. So I built a nice configuration selector:

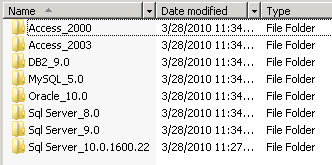

So, selecting "Classes Only", I can generate the class manager and base classes. But also note that this step sets up the massive directory structure of possibilities for code that could have been generated:

Now, simply generating the .cs files really doesn't help me much, so I needed the codegen tool to generate the Visual Studio project files too. Clicking the "All (Solution)" configuration option, I am able to generate these wrapper projects (only 2005 and 2008; I won't update for 2010 until it RTMs). After clicking through the upgrade in 2010, I have a non-compiling project with all files included in the project:

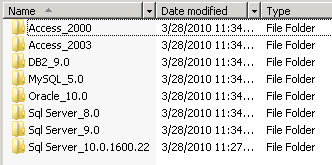

Why doesn't it compile? I didn't generate the data access layer. I have to generate the stored procedures for the tables to support the class manager. Going back into the tool, I change the config to Stored Procedures, push a button and get a full set of SPs for multiple different platforms:

Executing the SP script, I get a set of CRUD+4 SPs that are supported by my class manager (and every other architectural layer that I generate). Clicking the Database Interface button, I'm able to generate the database interface class that allows me to do all kinds of fancy things around transactional support. Now my project will compile.
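To give a rough idea of what CRUD stored procedures for the `Contact` table can look like, here is a minimal hand-written sketch in T-SQL. The procedure names, parameter lists, and the choice to stamp the dates with `GETDATE()` are all assumptions for illustration, not the tool's actual output, and the "+4" procedures are not shown.

```sql
-- Hypothetical sketch only; names and shapes are assumed, not generated output.

-- Create: insert one contact, stamping both audit dates.
CREATE PROCEDURE [dbo].[Contact_Insert]
    @ContactId int,
    @FirstName varchar(50),
    @LastName  varchar(50),
    @StatusId  int
AS
BEGIN
    SET NOCOUNT ON;
    INSERT INTO [dbo].[Contact]
        ([ContactId], [FirstName], [LastName], [StatusId], [ModifyDate], [CreateDate])
    VALUES
        (@ContactId, @FirstName, @LastName, @StatusId, GETDATE(), GETDATE());
END
GO

-- Read: fetch one contact by its id.
CREATE PROCEDURE [dbo].[Contact_SelectById]
    @ContactId int
AS
BEGIN
    SET NOCOUNT ON;
    SELECT [ContactId], [FirstName], [LastName], [StatusId], [ModifyDate], [CreateDate]
    FROM [dbo].[Contact]
    WHERE [ContactId] = @ContactId;
END
GO

-- Update: change the editable columns and refresh ModifyDate.
CREATE PROCEDURE [dbo].[Contact_Update]
    @ContactId int,
    @FirstName varchar(50),
    @LastName  varchar(50),
    @StatusId  int
AS
BEGIN
    SET NOCOUNT ON;
    UPDATE [dbo].[Contact]
    SET [FirstName] = @FirstName,
        [LastName] = @LastName,
        [StatusId] = @StatusId,
        [ModifyDate] = GETDATE()
    WHERE [ContactId] = @ContactId;
END
GO

-- Delete: remove one contact by its id.
CREATE PROCEDURE [dbo].[Contact_Delete]
    @ContactId int
AS
BEGIN
    SET NOCOUNT ON;
    DELETE FROM [dbo].[Contact]
    WHERE [ContactId] = @ContactId;
END
GO
```

Because every procedure follows the same pattern and differs only in table and column names, this is exactly the kind of code that is tedious to write by hand and straightforward to generate from the schema.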

Amazingly enough, all that is pretty basic for a codegen tool, and I have kept this as simple as possible for the reader base. There are several other things that I didn't go into and won't go into (to keep my competitive advantage) that you'll never find in other tools.

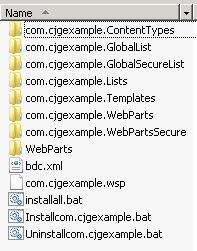

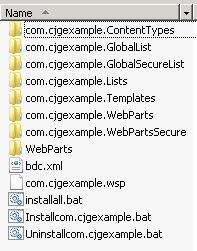

At this point, you're asking, "So, what about SharePoint?" OK, let's do it. Now that I have the basics in place, let's look at what gets generated for SharePoint. I don't have a configuration menu item for SharePoint, so it's a manual setup in the configuration utility to enable all the codegen points, but luckily it's only a couple of properties. Regenerating, I will get the following created in the SharePoint directory:

These are exactly what you think: autogenerated event receivers for lists, and web parts. But why two sets of web parts, WebParts and WebPartsSecure? The WebParts ones are designed to be admin-based web parts, with no security around what you can do to the base data, whereas the secure ones care about who YOU are and limit you to what you are allowed to see in the database.

In the SharePoint2007 folder, I get this:

What is all the stuff? This is the yummy SharePoint part of the tool. These are all Features, and they do the following:

- Content Types = a content type that exactly matches the attributes in the "data source"

- GlobalList/GlobalListSecure = a list with a page that maps to each web part (eliminates manually adding them to lists, which sucks when you have hundreds of tables)

- Lists = a list that uses the content type to create an exact copy of the data source. This is used for migrating back end data to SharePoint

- WebParts/WebPartsSecure = a set of DWP files of all web parts generated to allow you to add to any pages

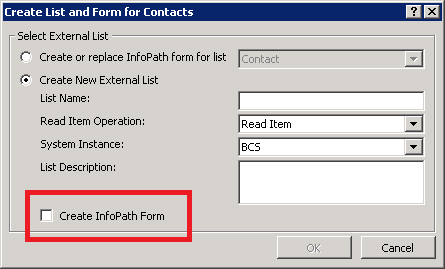

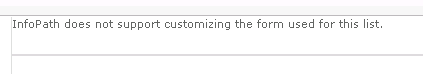

- bdc.xml = a similar file you would get from BDCMetaman or the SharePoint SDK tool

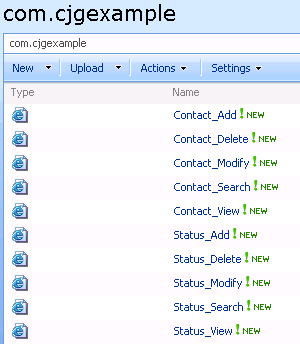

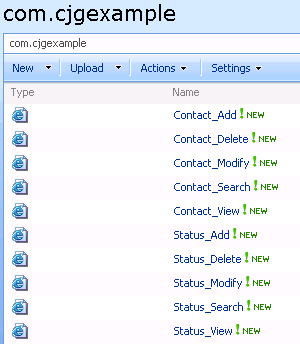

So let's put it all together, shall we? I compile all the necessary projects, add the assemblies to the GAC, add the safe controls, and install and activate the Global List feature. This is what I will get in SharePoint:

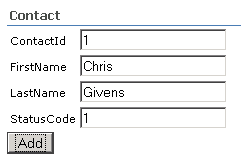

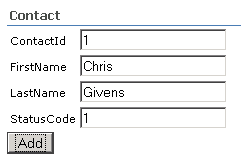

Clicking on the contact_Add.aspx page gives us the following:

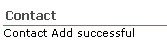

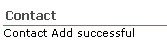

Clicking Add gives us this:

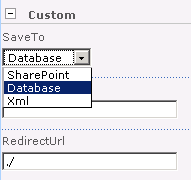

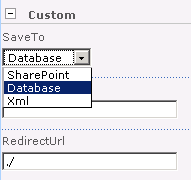

What does that mean? It means by default the web part saved the data to the database. If I had so chosen, I could also have told it to save to SharePoint (using the list feature generated previously) or to XML. On success I could even have it redirect to some page:

Now, the web part itself can be generated in two ways: with everything in child controls, or using a UserControl. Since most customers don't really like my ugly basic table (with no CSS elements for customization), they choose the user control option. So, with a couple of changes, I can tell it to skip any table building and load up a user control from the controltemplates directory. This then allows the customer to change the way the web part looks however they want!

So, to quote a couple of movies, "Whoop-de-do, Basil, what does it all mean?" Back to the problem at hand: relational tables inside a database, the thing BCS can't currently handle. Let's check it out.

The first thing to do is add the reference in the database. From there, I have to refresh a Relationship Property table in the tool. This basically says how I want things to be generated from a relationship standpoint (both incoming and outgoing). I simply set some configuration settings (one to start using the user control rather than the internal child control magic), regenerate the code with a couple of button clicks, redeploy my web parts, and I now have this:

Notice that I have a drop down for my relationship. If I had set ContactId to an identity key, it would not be in this web part and therefore the user wouldn't have to type it in. Clicking "Add contact" will again add a contact to the database. Of course, since the columns in the database are set to NOT NULL, if I had not filled in anything, I would get this:

To keep this short, all the web parts work. If security were needed, I would use the Secure version of the web parts and then make calls using the current user's identity. All of it is generated from pretty much any data source you could imagine.

Even though it took a lot longer to build (and read) this blog, if I had skipped the blog, this all took about 10 minutes to set up and deploy (no matter how many entities were in the data source). By the way, Microsoft Entity Framework == Boring… there is so much missing from that framework, which is why I built mine years before MS did.

If I get lots of questions and requests to see more, I might do another, more in-depth blog on some of the rules around the generation.

Chris