First and foremost, I LOVE SHAREPOINT. It's awesome, it pays my bills and IT ROCKS. I give credit where credit is due, but I'm also fair in that I will point out flaws too. As some of you surely remember, I tweeted a little rant about BCS and how annoyed I was that customer after customer keeps asking me to make BCS do something it just can't do. (Point proven by the tweet I got from Paul Andrew, "BCS is not a silver bullet", to which I replied, "It's a marketing problem", driven by conference presenters calling it the cure-all solution of the century.) A lot of people feel that because it can now both READ and WRITE data, you can DO ANYTHING with it. WRONG. So very wrong. In an effort to prove that point, here is my BCS Report Card (2007 scores in parentheses):

Read Data A+ (B)

Search Data B- (B-)

Write Data D (I)

Why, you say? Why not A's across the board? Let me explain it to you. Let's start with a very simple table:

    CREATE TABLE [dbo].[Contact](
        [FirstName] [varchar](50) NOT NULL,
        [LastName] [varchar](50) NOT NULL,
        [ModifyDate] [datetime] NOT NULL,
        [CreateDate] [datetime] NOT NULL
    )

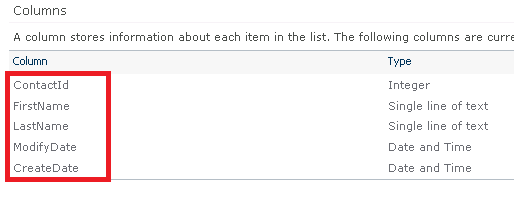

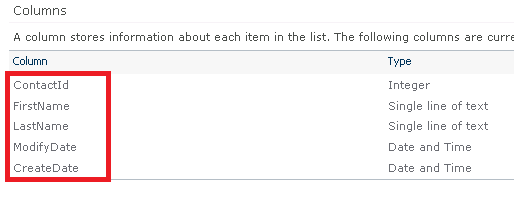

Using BCS, I can point to this data, read it, NOT search it, and write it. Why can't I search it? I didn't give it an identifier/primary key. That means I can't point BCS at repeated, non-unique data for searching and indexing, which is why search gets a B-. Adding the following will make it searchable (and now usable in SharePoint Designer 2010):

ContactId int primary key

NOTE: It doesn't have to be a primary key; BCS just needs a column it can use as an identifier.
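To see why an identifier matters, here is a minimal sketch in Python. It uses the standard-library `sqlite3` module as a stand-in for SQL Server, and BCS itself is not involved; the table names `ContactNoKey` and `ContactWithKey` and the sample names are made up for illustration. It only shows the underlying problem: without a unique column, two identical rows cannot be addressed individually.

```python
import sqlite3

# In-memory SQLite database standing in for SQL Server (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE ContactNoKey (
    FirstName TEXT NOT NULL,
    LastName  TEXT NOT NULL
);
CREATE TABLE ContactWithKey (
    ContactId INTEGER PRIMARY KEY,  -- the identifier column
    FirstName TEXT NOT NULL,
    LastName  TEXT NOT NULL
);
""")

# Two contacts that happen to share every visible value (hypothetical data).
rows = [("Pat", "Lee"), ("Pat", "Lee")]
conn.executemany("INSERT INTO ContactNoKey VALUES (?, ?)", rows)
conn.executemany(
    "INSERT INTO ContactWithKey (FirstName, LastName) VALUES (?, ?)", rows
)


def count_matches(table, where, params):
    """Return how many rows in `table` satisfy the WHERE clause."""
    sql = f"SELECT COUNT(*) FROM {table} WHERE {where}"
    return conn.execute(sql, params).fetchone()[0]


# Without a key, the best available "address" still matches both rows.
ambiguous = count_matches(
    "ContactNoKey", "FirstName = ? AND LastName = ?", ("Pat", "Lee")
)
# With a key, one value pins down exactly one row.
unique = count_matches("ContactWithKey", "ContactId = ?", (1,))

print(ambiguous, unique)
```

Anything that needs to fetch, index, or update one specific item needs the second shape.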

Perfect. A few more clicks in Designer and I have an external content type with a list using it. Another couple of clicks in the Ribbon and I can even have the data syncing with my Outlook (Fabian Williams first blogged an example of this). A few more clicks in my Search Service Application and it's indexed. AWESOME! Let me repeat that…AWESOME! The problem comes into play when I add another table with a relationship:

    -- NEW TABLE
    StatusId int primary key
    ShortName varchar(50)
    LongName varchar(50)
    ModifyDate datetime
    CreateDate datetime

    -- ADD REF COLUMN TO CONTACT TABLE
    StatusId int

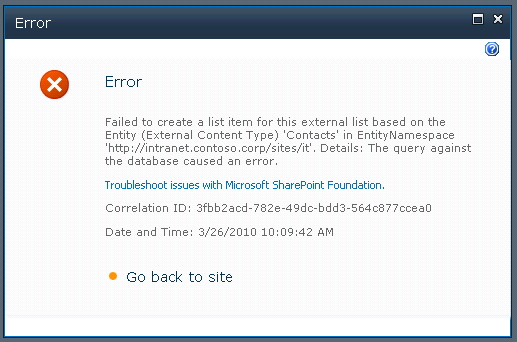

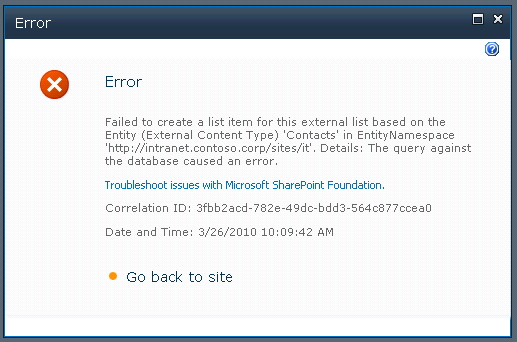

Regenerating my BCS/BDC application (which is not very friendly in Designer, but that's for another time) generates the same basic view of the contact data. However, notice that THE USER IS RESPONSIBLE for determining what the value of the StatusId column is…REALLY? REALLY? Of course if they type in something bad they get this:

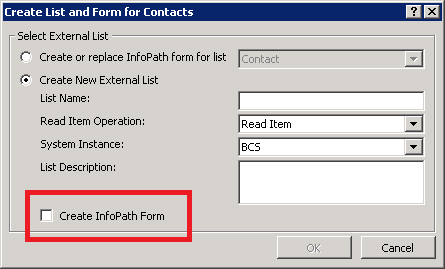

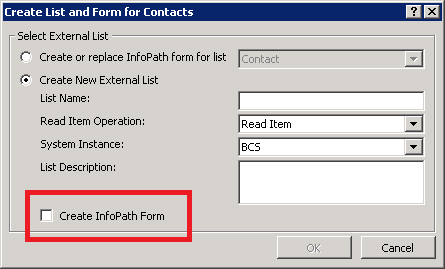
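The failure mode is easy to reproduce outside SharePoint. The sketch below again uses Python's `sqlite3` as a stand-in for SQL Server and assumes the relationship is enforced by a foreign key; the table name `Status`, the sample rows, and the helper `try_insert` are hypothetical. The actual error text BCS surfaces will differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite leaves foreign key enforcement off unless asked; SQL Server enforces it by default.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE Status (
    StatusId  INTEGER PRIMARY KEY,
    ShortName TEXT,
    LongName  TEXT
);
CREATE TABLE Contact (
    ContactId INTEGER PRIMARY KEY,
    FirstName TEXT NOT NULL,
    LastName  TEXT NOT NULL,
    StatusId  INTEGER REFERENCES Status(StatusId)
);
INSERT INTO Status VALUES (1, 'ACT', 'Active');
""")


def try_insert(status_id):
    """Insert a contact with a hand-typed StatusId, as a list user would."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO Contact (FirstName, LastName, StatusId) "
                "VALUES (?, ?, ?)",
                ("Pat", "Lee", status_id),
            )
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


good = try_insert(1)   # a StatusId that exists
bad = try_insert(42)   # a StatusId the user guessed wrong

print(good)
print(bad)
```

The database is doing its job here; the problem is that the form gives the user a free-text box for a value they have no way of knowing.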

Wait, what was that checkbox on the List generation? Generate InfoPath form?

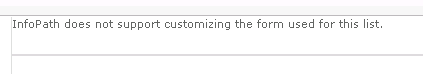

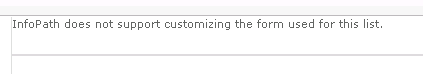

That sounds promising, but:

The point is that BCS/BDC really only works with a single non-relational entity when it comes to WRITING data. Yes, with SharePoint Designer (or manually, if you are apt to because you finally get so frustrated doing it with Designer that you want to throw your laptop) you can create "Associations" to build relationships between similar or disparate data sources (another awesome feature), but it really only helps the BDC web parts send filtering values. What does this mean?

BDC ONLY WORKS WITH DE-NORMALIZED MEANINGFUL TABLES WHEN YOU WANT FULL* FUNCTIONALITY

The asterisk on "Full" simply means the functionality offered by BCS (see the report card above), not what you would REALLY want.
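For anyone wondering what "de-normalized and meaningful" looks like at the database level, here is a rough sketch, again in Python with `sqlite3` standing in for SQL Server. The view `ContactFlat`, its `StatusName` column, the trigger, and the sample data are all hypothetical, and I have not run this shape through BCS; it only illustrates a flat entity where the user sees a readable status instead of a raw `StatusId`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Status (
    StatusId  INTEGER PRIMARY KEY,
    ShortName TEXT UNIQUE,
    LongName  TEXT
);
CREATE TABLE Contact (
    ContactId INTEGER PRIMARY KEY,
    FirstName TEXT NOT NULL,
    LastName  TEXT NOT NULL,
    StatusId  INTEGER REFERENCES Status(StatusId)
);
INSERT INTO Status VALUES (1, 'ACT', 'Active'), (2, 'INA', 'Inactive');

-- One flat, human-readable entity: no foreign key value exposed.
CREATE VIEW ContactFlat AS
SELECT c.ContactId, c.FirstName, c.LastName, s.ShortName AS StatusName
FROM Contact c
LEFT JOIN Status s ON s.StatusId = c.StatusId;

-- Translate a write against the flat shape back into the normalized tables.
CREATE TRIGGER ContactFlat_Insert INSTEAD OF INSERT ON ContactFlat
BEGIN
    INSERT INTO Contact (FirstName, LastName, StatusId)
    VALUES (
        NEW.FirstName,
        NEW.LastName,
        (SELECT StatusId FROM Status WHERE ShortName = NEW.StatusName)
    );
END;
""")

# The writer supplies a meaningful value, never the numeric key.
conn.execute(
    "INSERT INTO ContactFlat (FirstName, LastName, StatusName) VALUES (?, ?, ?)",
    ("Pat", "Lee", "INA"),
)

flat_rows = conn.execute(
    "SELECT FirstName, LastName, StatusName FROM ContactFlat"
).fetchall()
stored_status_id = conn.execute("SELECT StatusId FROM Contact").fetchone()[0]

print(flat_rows, stored_status_id)
```

Note that the user still has to type a valid status name, so this moves the plumbing into the database without giving anyone the drop down they actually want.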

What do I think would be ideal? The best option is to allow us to edit the InfoPath Form!!! Then we can map the control to a drop down populated from a data source! One would think that we should be able to edit the list column and point it to the corresponding column in the target association table to generate a selectable drop down (or even select the column in the BCS wizard or schema), but those are locked:

OR even better, when *generating* the BCS/BDC application definition, make it possible to create Metadata "Pointers" in the metadata service to these "distinct" items and have the column use the metadata service.

But even if they (the SharePoint Product Team) go to the extreme of implementing all this later (which seems inevitable as soon as they see this), "distinct" items bring in a whole different set of problems, such as caching and large-list-like issues (since they have already addressed this pattern of problem in large lists, it seems trivial for them).

Why did I asterisk "generating"? BCS is a code gen tool. It generates the BDC application definition file (and some other medium-difficulty plumbing) for you with a fancy wizard. Let me add, it's a very BASIC codegen tool. In the post that will follow this one, I will demonstrate for the first time my CodeGen tool (with its SharePoint extensions). Watch for that post!

Some of the comments I received when I tweeted my original rant were:

- Fabian & Chakkaradeep – use a .NET type: this is why I give an A+ to READ, but it still doesn't resolve the WRITE issues

- Some said use BDCMetaman; sorry, it doesn't solve these issues either, it's just another codegen tool like BCS

In summary, to quote a really great movie, "Choose, but choose wisely" your integration points with SharePoint. In the end, you may fall over dead and old before you get it working (or realize it just won't work) with BCS (at least in this version #2).

Chris